Experimental results on Android-in-the-Wild

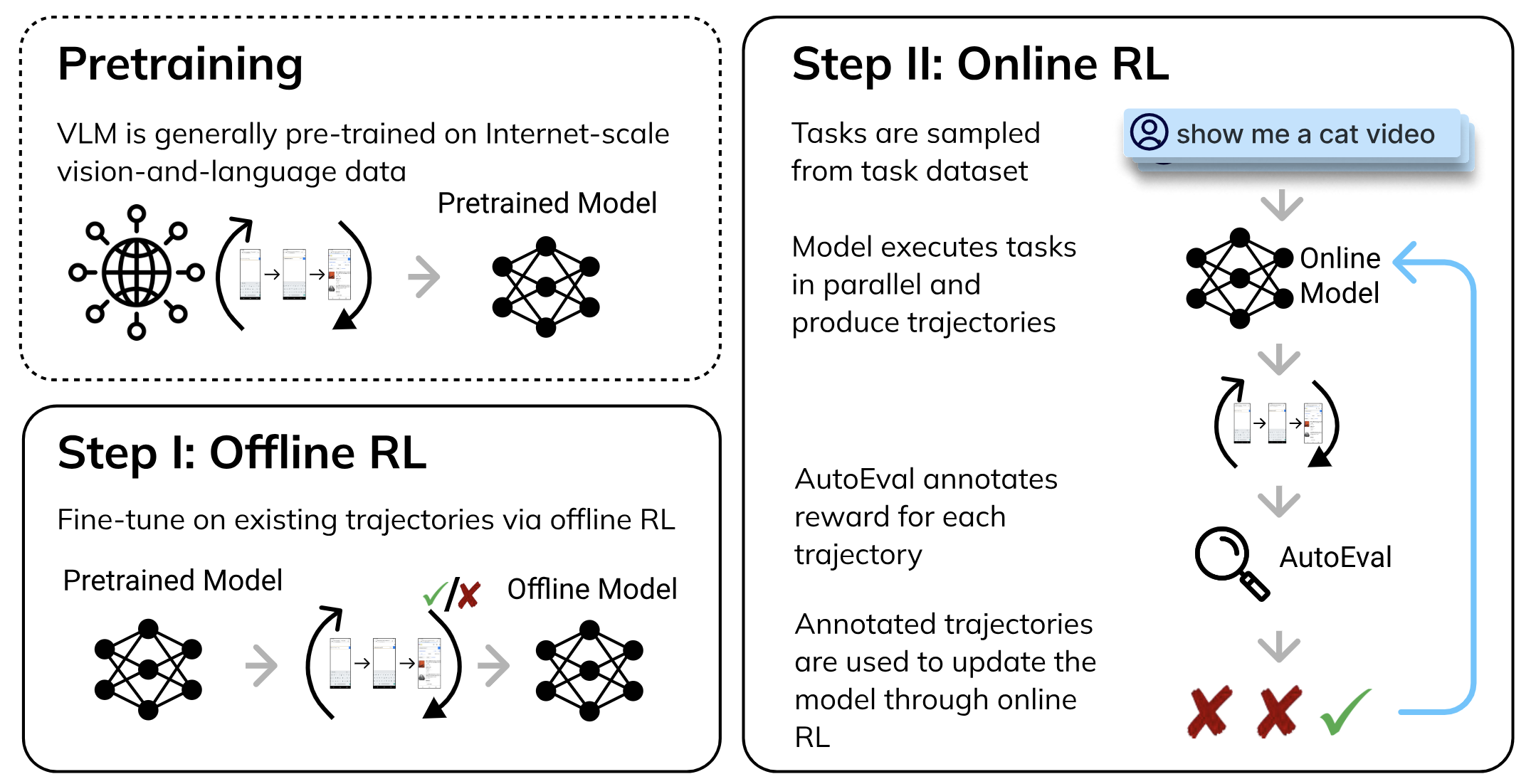

Digi-Q outperforms other state-of-the-art RL baselines using historically collected data. Surprisingly, it is even comparable to DigiRL, the strongest online RL baseline, without relying on costly online simulations! Please refer to our paper for further analysis.